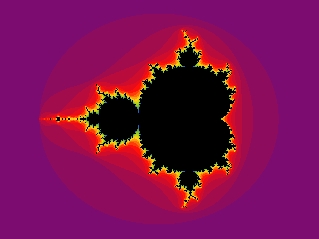

Fig. 1 The Mandelbrot Set

Fractal compression in intros

Invocation

"- Hi. I'm Turms.

- Hello, Turms.

- And, uh, well... I don't think I've ever written an intro.

- That's incredible. - Good on you, mate.

- I'm glad I got that off my chest."

(Paraphrasing Dory from 'Finding Nemo')

Killing zone

After such an introduction I hope you will not hold anything against me if I write a few words about algorithms that possibly may be used in your intros. But don't limit yourself to intros and use these techniques in other demo-scene productions as well. Maybe these methods are not 'off-the-shelf' solutions to apply in your production but I hope they will give you some fresh ideas. Possibly, now it is a good time to encourage you to read this article anyway. So, maybe I should mention that one of the early editions of Encarta Encyclopedia (published by M$) uses the fractal image compression for its entire graphics files. They claim to squeeze over 7000 photographs onto one CDROM (not to mention about an exhaustive collection of articles, seven hours of sound, 100 animations and 800 color maps). So far, I am quite sure the presented algorithms will be interesting for some of the Hugi readers.

By the way, have you seen Nemo? Outstanding graphics!

Preface

The algorithms I would like to cover in this paper are: fractal image compression and fractal volume compression. They are lossy compression methods. The purpose of this theoretical article is to bring closer these two algorithms to you but not to go into the implementation details, which could extend this paper to an enormous size. Hence, I will try to characterize possible applications of the methods rather than algorithms themselves. I hope, now you understand that before you start coding your own routines you will have to follow the references. Is it clear? Then we can take off now. :-)

Strange names

Probably I must explain a quite weird term 'fractal' for some of you. The first who gave a mathematical definition of it was Benoît B. Mandelbrot. He described a fractal as a set for which the Hausdorff-Besicovich (another strange term :-)) dimension strictly exceeds the topological dimension. In other words a fractal is a rough or fragmented geometric shape that can be subdivided in parts, each of which is (at least approximately) a reduced-size copy of the whole. These kinds of forms are generally self-similar and independent of scale. A tree and a fern is a prime example of natural fractals. Moreover, I am pretty sure that all of you have already seen demos with fast generation of the Julia and Mandelbrot Sets (Fig. 1). Such effects were quite popular in demos of early '90s. It was quite a serious challenge to generate one of these sets in real-time regarding their computational complexity. Even now it is hard to achieve real-time in high resolutions. Hence, it has been always a neat thing to implement. As far as I remember Robotnik by Rage shows one of these sets (dynamically zooming).

Fig. 1 The Mandelbrot Set

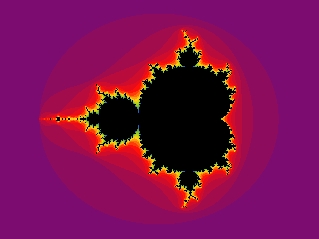

Also, some fading effects are based on 2D fractals. In this case, a generated escape-time fractal may be used to make a nice transition between two images or two scenes. The transition is based on a color value reached by one of the escape-time orbits (Fig. 2). On the other hand, you can simply blacken the screen with a dynamically growing fractal figure (using L-Systems). To finish this short introduction to fractals I recommend Liveevil by Mandula. It is a nice demo with fractal effects (including 3D fractals, a quaternion version of the Julia Set).

Fig. 2 A sample escape-time fractal

(it doesn't belong to the Mandelbrot or Julia Sets)

The smash time

Now, the time came to look more thoroughly at two-dimensional fractal compression, which simply contributes to the second algorithm I describe further in the text (the volumetric compression). Using this method we can compress images with ratios exceeding 10,000:1, which can be pretty useful in putting graphics into intros. Practically, such compression can be only achieved with certain types of pictures and human assistance. Therefore an image with a girl (the favorite theme of most demo-scene 2D artists :-)) may not compress equally well (the average ratio for such pictures might be about 25:1 to 80:1 while still looking 'pretty good'). Luckily for us, there is one kind of images that compress with high ratios (much over 100:1 with acceptable quality). They are textures! I know, one can say that he can generate most of the textures without wasting the space for them. I must perfectly agree with this claim. But try to think different. Imagine that you can store a few priory prepared textures in approximately 6 KB (+ decompression procedure) with ratio about 100:1 to even 800:1 [7]. In such textures you can put nice clouds (digital pictures), bricks, trees, mountains, outer space or even human faces. The decompression procedure is fairly simple and fast. This is one of the advantages over the JPEG 2000 algorithm. Moreover, the compressed images may be scaled to any size. Of course, this is done with a little bit cheating but the final result is counting which is not too bad.

The idea of the algorithm strictly comes from self-similarity. That's the reason why textures compress so well - they are mostly made of self-similar parts. Fractal image compression is based on Iterated Function Systems (shortly IFS, here are fractals 'hidden'), which are covered in many books [3] and therefore I will discuss them very shortly.

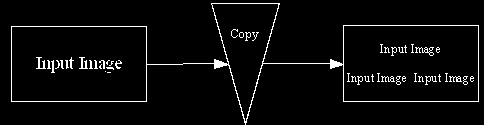

IFS come from the idea of Multiple Reduction Copying Machine depicted below (Fig. 3).

Fig. 3 Multiple Reduction Copying Machine

As you can see the copier has three lenses that reduce the size of the input image by one half and translates them to the three different positions. The whole machine works in an infinite feedback loop (note: most of the fractals are iterated in such loops) where the output of one stage is the input to the next. To manage the work of the copier every lens represents a contractive affine transformation that consists of scaling, rotating, shearing, and translation. One of the most important features of the IFS is that it can be reverse-engineered from the original image, like the sample fern (Fig. 4). This is exactly what fractal image compression is assumed to do.

Fig. 4 IFS Fern sample,

Image taken from: http://www.geocities.com/agkking/cppcode/fractals/fern.htm

Blah, blah, blah

Now I would like to draw your attention to the algorithm itself. Divide an image into blocks (e.g. 8x8) and then notice that many of them are quite similar to the others. Then using scaling, rotation, mirror flips and changing the luminance you can try to find the best matches for every block. Write all the transformation with least necessary bits and compress them with Huffman algorithm. Now, you have a compressed image.

That was only a brief introduction to the idea of fractal image compression. Surprisingly, it is almost as simple as it looks. The one thing you should noticed is that the process of finding similar blocks may last almost forever and therefore compression may take some time (decompression is fast and definitely may be performed in real-time). In real life, the image is divided into domain blocks and range blocks, where domain block are larger than range ones. Domain block may overlap one another but range blocks may not. Domain blocks are also called destination regions while range blocks are described as reference regions (more friendly names). The search of similar block is performed with contractive transformations. A domain block is mapped onto a range block using 'shrinking operations'.

Fig. 5 A domain block and range blocks

Figure 1 depicts an image divided into 4 range blocks and 1 domain block (enclosing the whole image). The grayed reference region is similar to the destination one (using a scaling operator). To assure yourself it is true just enlarge a small region by 2 and you will gain the domain block. The search process may last forever so you have to limit your search anyway. However, you should always try to choose the best matches between domain and range block. The choice is based on an operator (a resulting error) that returns the quantity of differences between the destination region and the reference one. Then you simply pick up the region with the smallest error. As the resulting operator you can choose mean squared error.

In most implementations the domain blocks are twice the size of the range blocks. So the spatial contraction is constant and can be hard coded into the decompression program. What needs to be stored are:

Transformation No. of Bits (1) No. of Bits (2)

x position of domain block 8 6

y position of domain block 8 6

luminance scaling 8 5

luminance offset 8 6

symmetry indicator 3 3

Total: 35 26

In the first scheme, a byte is allocated to each number except for the symmetry indicator.

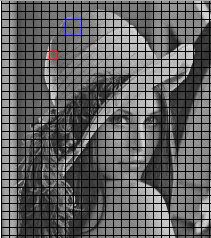

Fig. 6 Famous Lena divided into domain (red rectangle)

and range blocks (blue rectangle)

3D girls (oops I did it again...)

Fractal volume compression is an extension of the algorithm discussed above. This method is used to compress volumetric data. Mind that you do not compress meshes (triangles and textures data) but volumetric data (points in 3D space). Hence, to render a solid with texture you will have to apply one of the iso- surface algorithms on the decompressed data and generate your own texture coordinates. The obtained average ratio for such data is about 18:1 to 40:1, which is enough to insert a complex object into your exe file. Surly, one can say that generating objects is a far better idea - and honestly - I must agree again. But not everything may be generated.

Remember that you can always increase the ratio in favor of quality. The method itself is described as follows in [6]:

Just as fractal image compression encodes images with block-restricted contractive self-transforms, fractal volume compression likewise encodes volumes. The design issues for creating a fractal block coding system for volumes are direct extensions of those used in fractal image compression. The process partitions the volume into both fine range blocks and coarse domain blocks, and then finds for each range blocks the transformed domain block that best matches it.

There is nothing surprising in the idea itself but the complexity of the algorithm increases with higher dimensionality.

There's nothing left to say anymore (but I will put in my two cents - a word about surfaces).

Currently, one of methods to input 3D objects in intros are to save them in low-poly versions. Then, in the application, an "inverse LOD" (as I called it...) may be applied to enhance the mesh details and a surface over sampled points is created. I think that volumetric fractal compression may give better effects.

Personally, I recommend using one of the displaced subdivision methods in favor of well known the Marching Cubes algorithms to obtain solid objects. These kinds of algorithms may give you a smoother surface over sampled data with lower count of polygons. Moreover, you can even denser a mesh without having necessary sample points.

Epitaph

Did I tell you that the fractal image compression is patented? Unfortunately, it is. Still, you can introduce some innovations to the algorithm to make it free to use. If you think that is not possible you are possibly wrong. There are already algorithms that get around the patent. Michael Barnsely - the man that stands behind the method - patented only one approach of dividing image into domain and range blocks (as far as I know...). He uses rectangles. With some effort, you can try to use triangles or other basic shapes in dividing image into block to make the algorithm looks different.

Finally, there are many extensions to the very basic fractal image compression algorithm presented in this paper. Some of these methods improve the algorithm much. On the other hand, jpeg compression has already made a huge step with implementation of JPEG 2000. The hybrid version of Wavelet Fractal Image Compression has been already proposed.

Acknowledgments

I would like to thank to Hopper/Squoquo for his review of this article. So, at least one programmer with intro-coding background has read it :-). His comments and remarks helped me in cleaning the paper up. Also, I would like to thank to Adok/Hugi for his help, patience ;-) and English grammar corrections.

References

1. www.google.com

2. Peitgen, Jurgens, Saupe, Fractals for the Classroom, Springer (1992) - highly recommended!

3. M. Barnsley, Fractal Image Compression

4. M. Barnsley, Fractals Everywhere, Academic Press (1989)

5. comp.compression - FAQ of the group

6. W. Cochran, J. Hart, P. Flynn, Fractal Volume Compression

7. S. Nikiel, Reasearch in Fractal Image Compression (http://www.issi.uz.zgora.pl/~snikiel/)